Deep Meditations: A brief history of almost everything (2018)

“It feels like the machine is trying to tell me something…” – audience member

Deep Meditations: A brief history of almost everything in 60 minutes is a large-scale video and sound installation; a multi-channel, one hour abstract film; a monument that celebrates life, nature, the universe and our subjective experience of it. The work invites us on a spiritual journey through slow, meditative, continuously evolving images and sounds, told through the imagination of a deep artificial neural network.

We are invited to acknowledge and appreciate the role we play as humans as part of a complex ecosystem heavily dependent on the balanced co-existence of many components. The work embraces and celebrates the interconnectedness of all human, non-human, living and non-living things across many scales of time and space – from microbes to galaxies.

Installation view: 5 channel ‘Monolith’ version

“Immaterial/Re-material: A Brief History of Computing Art”

UCCA Center for Contemporary Art, Beijing, CN (2020)

Installation view: 3 channel ‘Landscape’ version

Sonar+D, Barcelona, Spain (2019)

“Cybernetic Consciousness”, Itau Cultural, Sao Paulo, Brazil (2019)

Installation view: 7 channel Gallery version

“Thin as Thorns, In These Thoughts in Us: An Exhibition of Creative AI and Generative Art”

Honor Fraser Gallery, Los Angeles, CA, USA (2020)

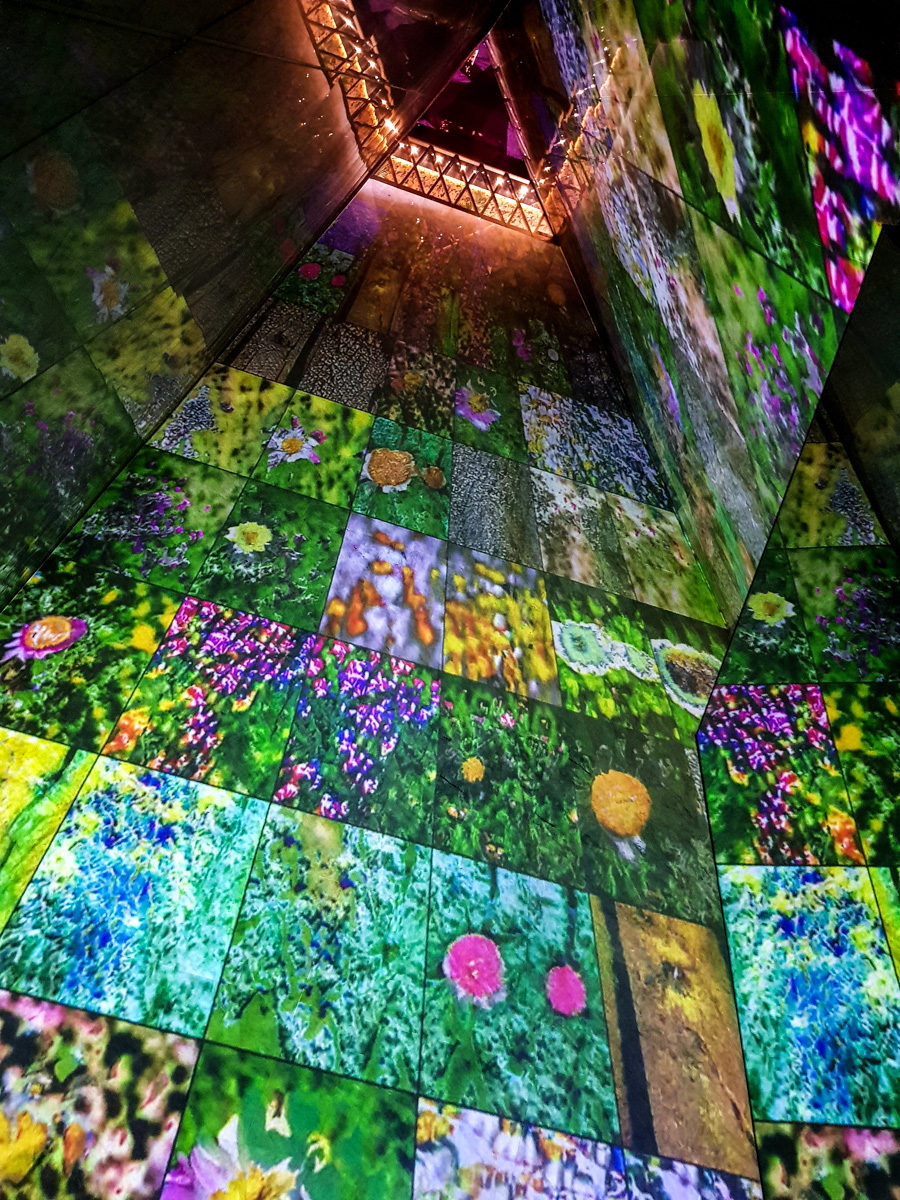

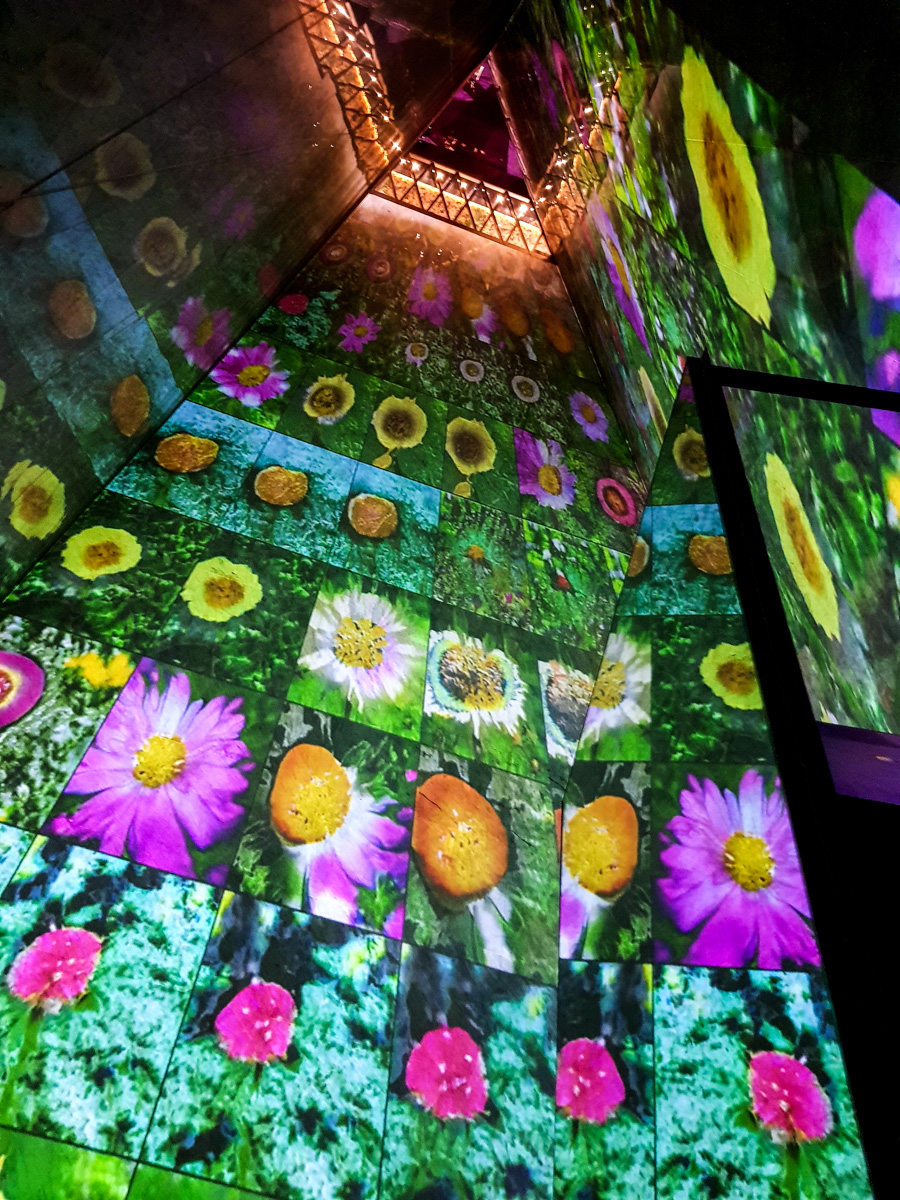

Installation view: Site specific ME by Melia, London

Foster+Partners’ ME by Melia 9-story high atrium, London, UK

(as part of Sonar+D London, 2019)

Installation view: Site specific Kennin-ji Temple, Kyoto

Kennin-ji Temple (Japan’s oldest Zen Buddhist Temple), Kyoto, JP (2019)

Excerpts

Short excerpts from the 60 minute film.

Teasers

The narrative of the 60 minute film sped up and simplified to fit into a few minutes.

in 5 minutes

in 2 minutes

Information

2018

Immersive, large-scale video and sound installation.

3 or 5 channel 4K video. Stereo audio.

Duration: 60 minute seamless loop.

Technique: Custom software, generative video, generative audio, Artificial Intelligence, Machine Learning, Deep Learning, Generative Adversarial Networks, Variational Autoencoders.

Arguably the world’s first film constructed in and told entirely through the latent space of a Deep Neural Network, with meaningful human control over narrative.

Update: To see studies for a new (2020) incarnation please see here.


What does love look like? What does faith look like? Or ritual? Worship? What does God look like? Could we teach a machine about these very abstract, subjectively human concepts? As they have no clearly defined, objective visual representations, an artificial neural network is instead trained on our subjective experiences of them; specifically, on what the keepers of our collective consciousness think they look like, archived by our new overseers in the cloud. Hundreds of thousands of images tagged with these words (and many more*) were scraped (i.e. autonomously downloaded by a script) from the photo sharing website Flickr to train the neural network. The images seen in the final work are not the downloaded images; they are generated from scratch from the fragments of memories in the depths of the neural network. Sound is generated by another artificial neural network, trained on hours of religious and spiritual chants, prayers and rituals from around the world, scraped from YouTube.

The abstract narrative of the film takes us through the birth of the cosmos, formation of the planets and earth, rocks, and seas, the spark of life, evolution, diversity, geological changes, formation of ecosystems, the birth of humanity, civilization, settlements, culture, history, war, art, ritual, worship, religion, science, technology.

“We are all connected. To each other, biologically. To the earth, chemically. To the rest of the universe atomically.”

– Neil deGrasse Tyson

“The cosmos is within us. We are made of star-stuff. We are a way for the universe to know itself.”

– Carl Sagan

“How can we think in times of urgencies without the self-indulgent and self-fulfilling myths of apocalypse, when every fiber of our being is interlaced, even complicit, in the webs of processes that must somehow be engaged and repatterned? Recursively, whether we asked for it or not, the pattern is in our hands.

…

It matters what ideas we use to think other ideas with. It matters what matters we use to think other matters with. It matters what knots knot knots.

It matters what thoughts think thoughts. It matters what worlds world worlds”

– Donna Haraway

Meaning

So the film really is a brief history of almost everything. But of course, ultimately, what you see depends on who you are. The piece is intended for both introspection and self-reflection, as a mirror to ourselves, our own mind and how we make sense of what we see; and also as a window into the mind of the machine, as it tries to make sense of its observations and memories. But there is no clear distinction between the two, for the very act of looking through this window projects ourselves through it. As we look upon the abstract, slowly evolving images conjured up by the neural network, we complete this loop of meaning-making: we project ourselves back onto them, we invent stories based on what we know and believe, because we see things not as they are, but as we are. And we complete the loop.

Data

* The artificial neural network is trained on images tagged with the words everything, life, love, art, faith, ritual, worship, god, nature, universe, cosmos (and many more) scraped from the photo sharing website Flickr. However, despite such a diverse dataset, the artificial neural network is not given any labels regarding the data it is exposed to. It is not provided the semantic information required to distinguish between different categories of images (or sounds): between small or large; microscopic or galactic; organic or human-made. Without any of this semantic context, the network analyses and learns purely from surface aesthetics. Swarms of bacteria blend with clouds of nebula; oceanic waves become mountains; flowers become sunsets; blood cells become technical illustrations.

Panels

The multiple panels of video represent related but slightly varied journeys, happening simultaneously. These journeys affect each other, but they have different characteristics. Some are slower and steadier, focusing on more long-term goals, while others are quicker, more exploratory, curious, seeking shorter-term rewards. Other channels represent the same journeys, but at different points of the network's training history, shedding light on how slightly more exposure to certain types of stimulus can cause the network to reinterpret the same inputs as visually and compositionally related, but with semantically vastly varying results. All of the journeys are still somehow in sync, branching and spiralling around each other.
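One hypothetical way to realise panels like these, offered purely as an illustration and not as the method used for the work, is to let each panel trail a shared latent path at its own pace. In the sketch below, the function `follow`, its `rate` and `noise` parameters, the 512-dimensional latent size and the random-walk stand-in path are all assumptions: a low rate gives a slow, steady panel, while a higher rate plus a little random jitter gives a quicker, more exploratory one.

```python
import numpy as np


def follow(target_path: np.ndarray, rate: float, noise: float, seed: int) -> np.ndarray:
    """Hypothetical helper: trail a shared latent path with an exponential moving average.

    rate:  fraction of the remaining distance to the shared path covered each frame (0 < rate <= 1).
    noise: standard deviation of per-frame exploratory jitter added to the latent vector.
    """
    rng = np.random.default_rng(seed)
    z = target_path[0].copy()
    out = [z.copy()]
    for target in target_path[1:]:
        z = z + rate * (target - z)                    # move part of the way towards the shared path
        z = z + noise * rng.standard_normal(z.shape)   # small exploratory detour
        out.append(z.copy())
    return np.stack(out)


rng = np.random.default_rng(1)
# Assumption: a random walk in a 512-D latent space stands in for a curated shared journey.
shared = np.cumsum(0.05 * rng.standard_normal((300, 512)), axis=0)

steady = follow(shared, rate=0.02, noise=0.0, seed=2)    # slow, steady, long-term
curious = follow(shared, rate=0.3, noise=0.02, seed=3)   # quick, exploratory
print(steady.shape, curious.shape)
```

Because every panel is pulled towards the same shared path, the journeys differ in pace and detail while staying loosely in sync. Rendering each path through a trained generator would be a separate step, not shown here.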

Latent Storytelling & Narrative

The work is also an exploration into the use of Deep Generative Neural Networks as a medium for creative expression and storytelling with meaningful human control over narrative. While the explorations of these so-called ‘latent spaces’ in generative neural networks are typically random, here, precise journeys are carefully constructed in these high-dimensional spaces to create a narrative.
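The techniques actually used for this work are described in the paper listed under Research. As a minimal sketch of one common building block only, assuming a 512-dimensional Gaussian latent space (typical for ProGAN) and using random vectors as stand-ins for hand-picked keyframes, a journey can be built by spherically interpolating (slerp) between successive keyframes. The names `slerp` and `latent_journey` are hypothetical.

```python
import numpy as np


def slerp(z0: np.ndarray, z1: np.ndarray, t: float) -> np.ndarray:
    """Spherical linear interpolation between two latent vectors, t in [0, 1]."""
    # Angle between the two vectors, from their normalised dot product.
    u0 = z0 / np.linalg.norm(z0)
    u1 = z1 / np.linalg.norm(z1)
    omega = np.arccos(np.clip(np.dot(u0, u1), -1.0, 1.0))
    if np.isclose(omega, 0.0):
        # Nearly parallel vectors: fall back to linear interpolation.
        return (1.0 - t) * z0 + t * z1
    s = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / s) * z0 + (np.sin(t * omega) / s) * z1


def latent_journey(keyframes: list[np.ndarray], frames_per_segment: int) -> np.ndarray:
    """Hypothetical helper: a frame-by-frame path through an ordered list of latent keyframes."""
    path = []
    for z0, z1 in zip(keyframes[:-1], keyframes[1:]):
        for i in range(frames_per_segment):
            path.append(slerp(z0, z1, i / frames_per_segment))
    path.append(keyframes[-1])  # end exactly on the final keyframe
    return np.stack(path)


rng = np.random.default_rng(0)
latent_dim = 512  # assumption: ProGAN-style 512-D latent vectors
keyframes = [rng.standard_normal(latent_dim) for _ in range(4)]  # stand-ins for curated keyframes
path = latent_journey(keyframes, frames_per_segment=30)
print(path.shape)  # (91, 512): 3 segments of 30 frames, plus the final keyframe
```

Slerp is often preferred to straight linear interpolation for Gaussian latents because linear midpoints have a noticeably smaller norm than typical samples, which can lead the generator towards less typical outputs.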

Research

I presented some of the techniques used for the creation of this work at the 2nd Workshop on Machine Learning for Creativity and Design at the Neural Information Processing Systems (NeurIPS) 2018 conference (one of the most prestigious academic conferences on artificial intelligence).

More information can be found here.

paper: https://nips2018creativity.github.io/doc/Deep_Meditations.pdf

Related Work

- Deep Meditations (2018)

- Deep Meditations: Morphosis (2019)

- Deep Meditations: Abiogenesis (2020)

- Deep Meditations: We are all connected studies (2020)

- Deeper Meditations (2021)

- BigGAN studies (2018)

Acknowledgments

Created during my PhD, funded by the EPSRC.

Visual network is a ProGAN.

Audio network is a custom architecture: Grannma MagNet.

Amongst many people, I’d like to especially thank Nina Miolane and Sylvain Calinon for their contributions and help with Riemannian Geometry – and particularly Miolane et al. for geomstats.