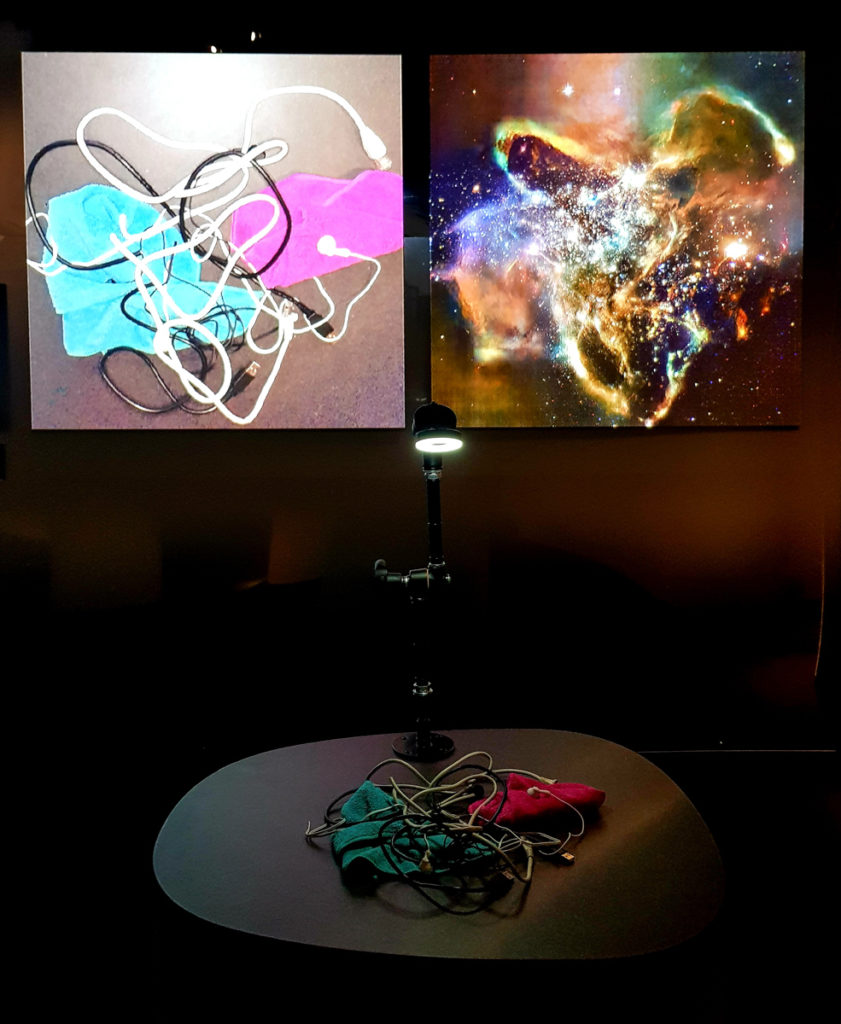

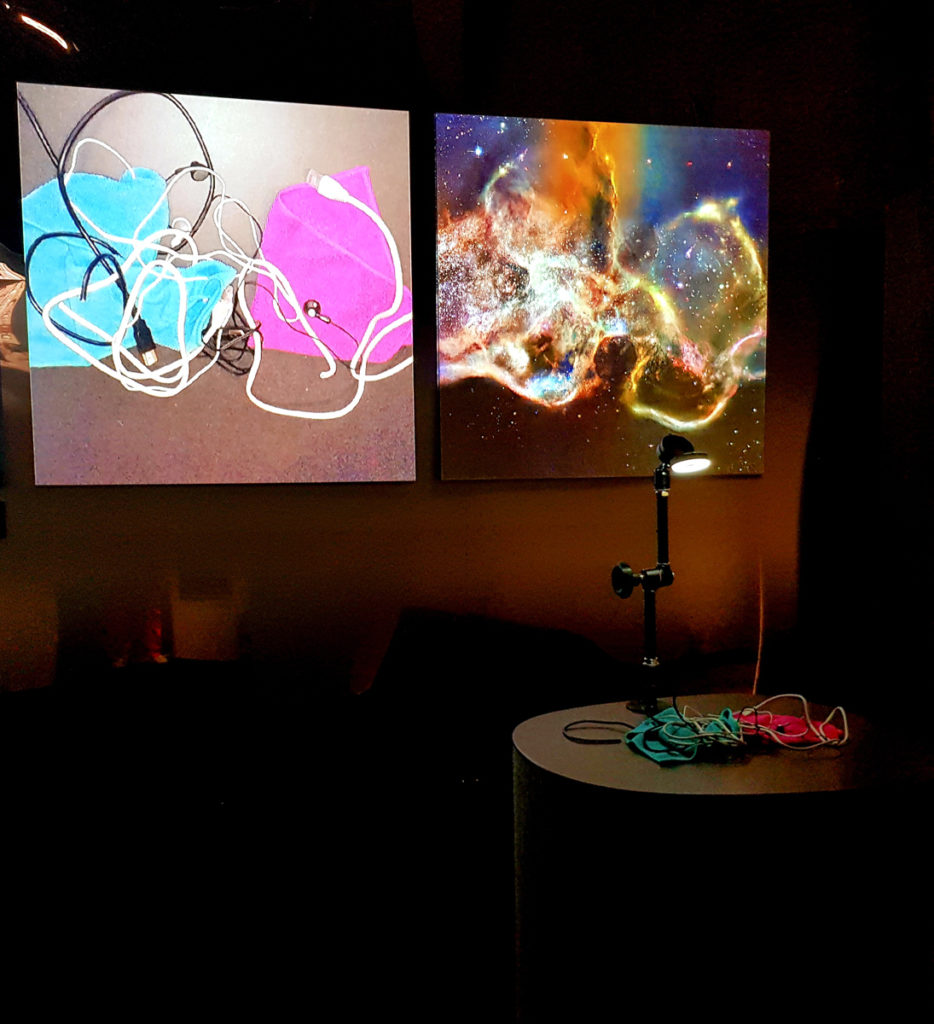

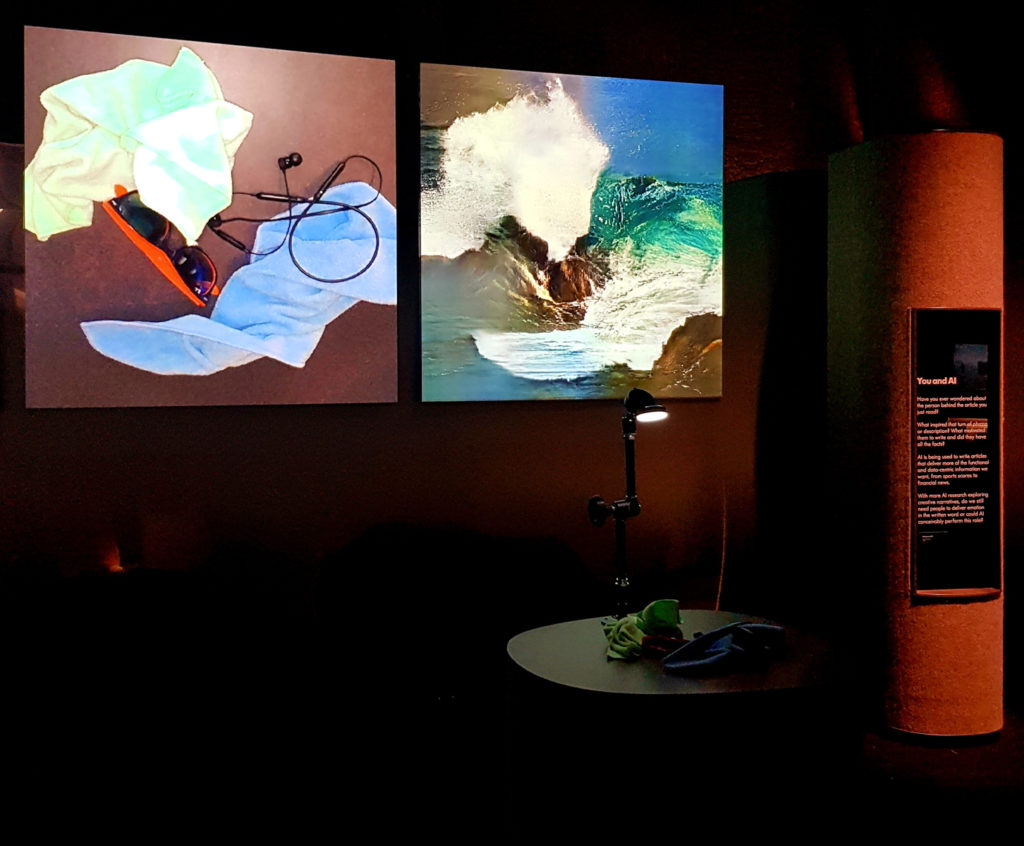

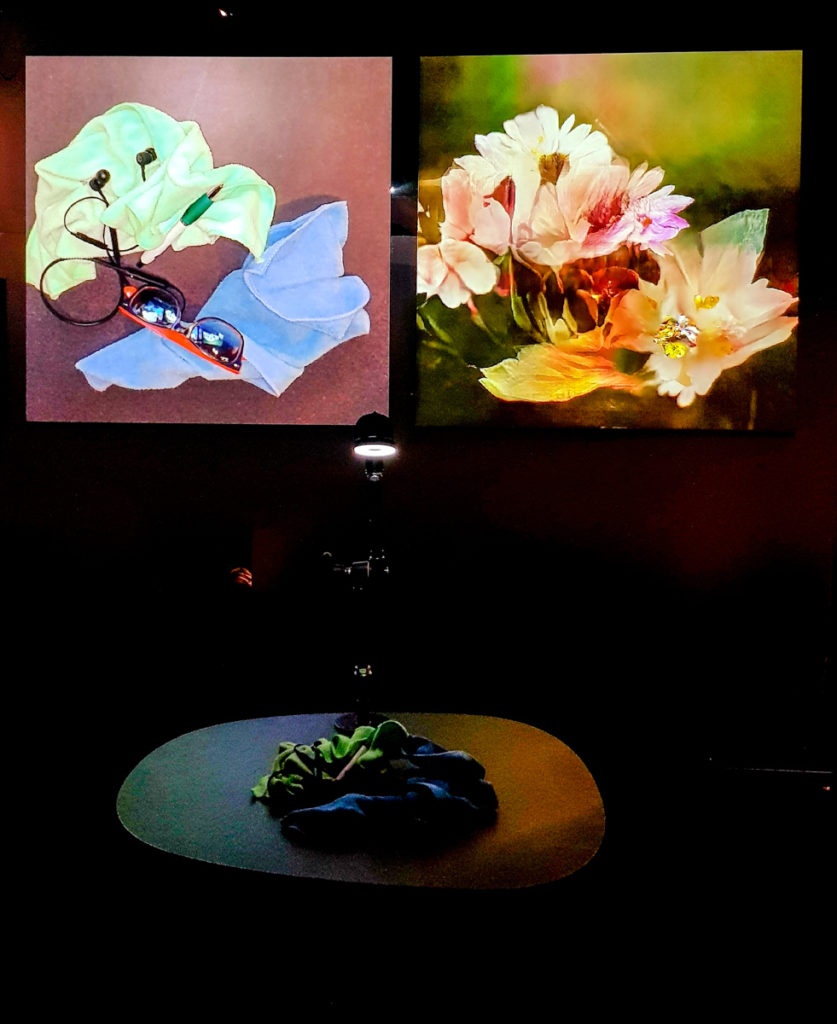

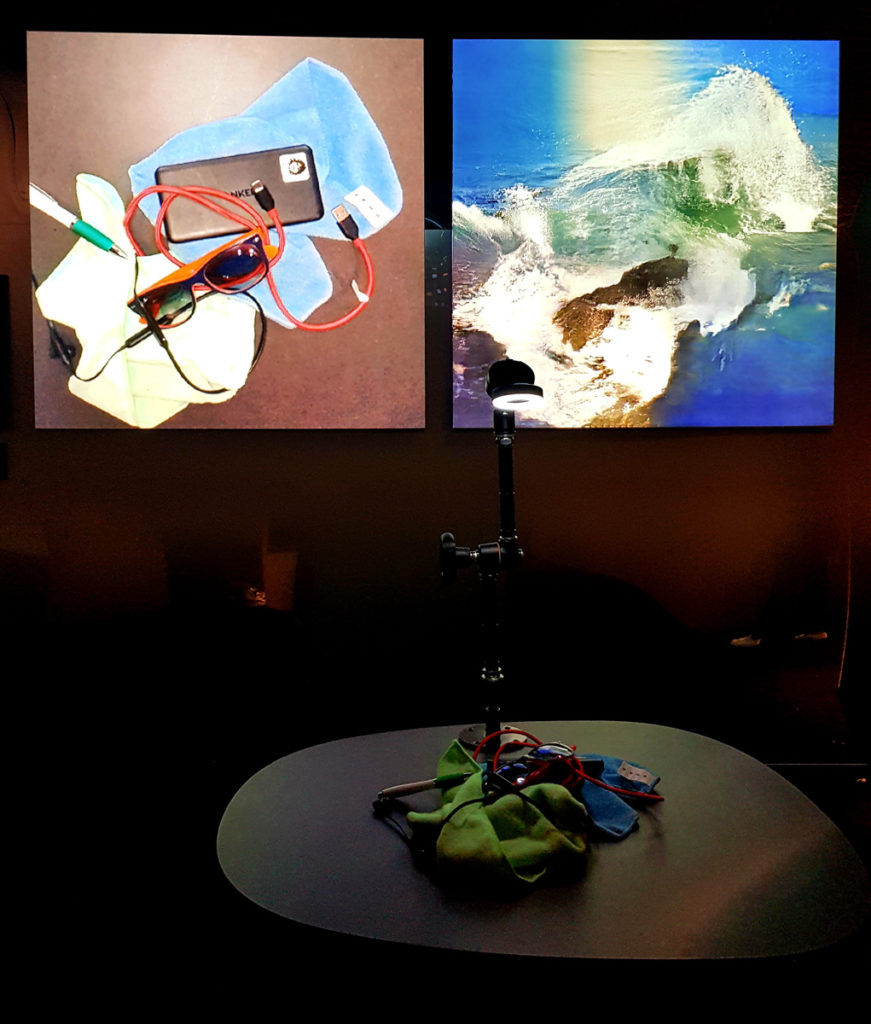

Learning to See: Interactive (2017)

2017. Materials: Custom software, PC, camera, projection, cables, cloth, wires. Technique: Custom software, Artificial Intelligence, Machine Learning, Deep Learning, Generative Adversarial Networks.

Part of the Learning to See series. Please see the series project page for more information.

I also presented a paper on this work at SIGGRAPH 2019. For a much deeper conceptual and technical analysis, please see Chapter 5 of my PhD thesis.

Installation view at “AI: More than Human”, The Barbican, London, UK, 2019

This particular edition is an interactive installation in which a number of neural networks analyse a live camera feed pointing at a table covered in everyday objects. Through a very tactile, hands-on experience, the audience can manipulate the objects on the table and see corresponding scenery emerge on the display in real time, reinterpreted by the neural networks. Every 30 seconds the scene changes between networks trained on five different datasets. Four represent the natural elements: ocean & waves (representing ‘water’), clouds & sky (representing ‘air’), fire, and flowers (representing ‘earth’ and life). The fifth is images from the Hubble Space Telescope (representing the universe, cosmos, quintessence, aether, the void, the home of God). The interaction can be a very short, quick, playful experience, or the audience can spend hours meticulously crafting their perfect nebula, shaping their favourite waves, or arranging a beautiful bouquet.
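As a rough illustration of the 30-second switching described above, here is a minimal sketch in Python. It is not the installation's actual code: the names `DATASETS`, `SWITCH_INTERVAL_S` and `active_dataset` are hypothetical, and the fixed cycling order is an assumption, since the text only says that the scene changes between networks.

```python
# Dataset labels are taken from the description; the order is an assumption.
DATASETS = ["ocean & waves", "clouds & sky", "fire", "flowers", "Hubble images"]
SWITCH_INTERVAL_S = 30.0  # "every 30 seconds", per the description


def active_dataset(elapsed_s: float) -> str:
    """Return the dataset whose network would be active after elapsed_s seconds.

    Assumes a simple round-robin cycle that wraps back to the first dataset.
    """
    index = int(elapsed_s // SWITCH_INTERVAL_S) % len(DATASETS)
    return DATASETS[index]
```

In a live loop this could be called once per camera frame with the time elapsed since start-up (for example from `time.monotonic()`), so that the network used to reinterpret the frame changes without any extra timer state.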

See the Learning to See series page for more information.