Mocap2Web (2012)

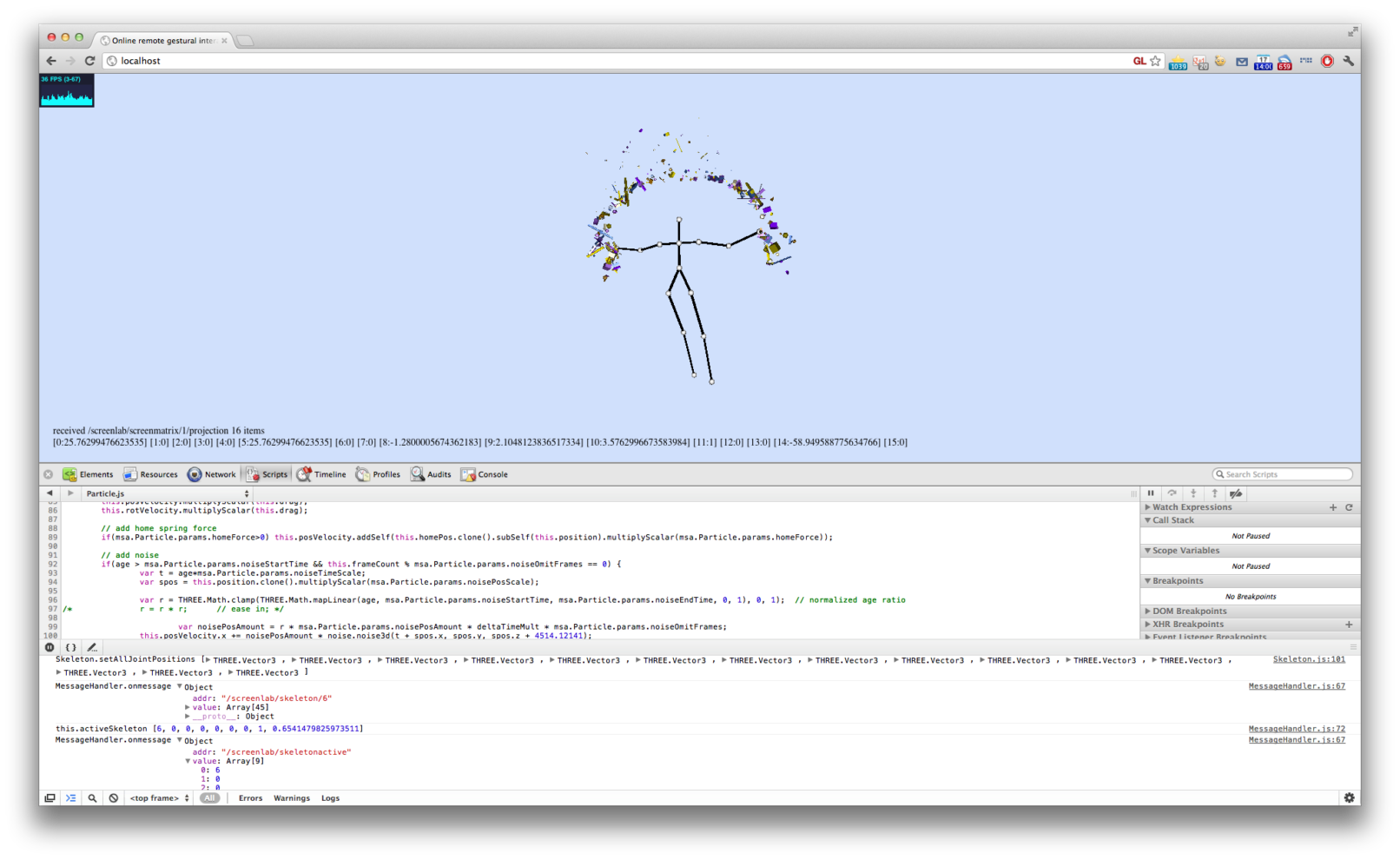

Research and a demo for streaming live motion capture data of one or more performers to the web, for abstract visualisation and playful interaction in the browser.

This simple demo, developed during a 5-day residency, lays the foundations for a larger vision: FORMS-like abstract visualisations of performances that are realtime, playfully interactive, globally telematic and massively participatory.

One or more performers (e.g. musicians, singers, dancers) can be located anywhere in the world. Instead of (or in addition to) filming them and streaming the video to the web, their skeletal pose is motion captured (e.g. using a Microsoft Kinect) and streamed to the web in realtime. A purpose-built website receives this data and visualises it in abstract, playfully interactive ways.
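The source code is not reproduced here. Judging by the backend repository's name (TornadoOSCWSStream), the pipeline appears to receive OSC messages from the capture machine and relay them to browsers over WebSockets. The following is a minimal sketch of that relay's core step. It decodes one OSC 1.0 message and re-encodes it as JSON text for a WebSocket client. The address scheme `/skeleton/<user>/<joint>` with x, y, z float arguments is a hypothetical example, not the format the original Kinect sender used. The function names are also illustrative.

```python
import json
import struct


def _pad4(n: int) -> int:
    """Round n up to the next multiple of 4 (OSC aligns every field to 4 bytes)."""
    return (n + 3) & ~3


def _osc_string(text: str) -> bytes:
    """Encode text as an OSC-string: ASCII, null-terminated, padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (_pad4(len(raw)) - len(raw))


def _read_osc_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read an OSC-string starting at offset; return (text, offset of next field)."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    return text, offset + _pad4(end - offset + 1)


def encode_osc(address: str, *args) -> bytes:
    """Build an OSC 1.0 message with int32 ('i'), float32 ('f') or string ('s') args."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not supported in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(tags) + payload


def decode_osc(data: bytes) -> tuple[str, list]:
    """Parse an OSC 1.0 message (not a bundle) into (address, args)."""
    address, offset = _read_osc_string(data, 0)
    tags, offset = _read_osc_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            text, offset = _read_osc_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
    return address, args


def osc_to_ws_json(data: bytes) -> str:
    """Convert one OSC joint message into a JSON text frame for browser clients."""
    address, args = decode_osc(data)
    return json.dumps({"address": address, "args": args})


if __name__ == "__main__":
    # Hypothetical joint message: user 0's head at (x, y, z).
    packet = encode_osc("/skeleton/0/head", 0.25, 1.5, -2.0)
    print(osc_to_ws_json(packet))
```

Converting to JSON on the server keeps the browser side simple. A web page cannot open a raw UDP socket to receive OSC directly, but it can parse JSON arriving over a WebSocket and hand the joint positions to the visualisation.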

Source code

github.com/memo/TornadoOSCWSStream

github.com/elliotwoods/Screenlab-0x01

License

Released under the MIT License

Acknowledgements

Created during a 5-day residency at ScreenLab 0x01 at MediaCityUK, with the University of Salford, hosted by Elliot Woods and Kit Turner.

The web browser visualisation uses three.js.

The backend is based on a demo by Chris Allick:

github.com/chrisallick/TornadoOSCWSStream